Configuring Console2 with Cygwin

When using Windows, Console2 does a great job of managing my console windows, but it’s not intuitive how to configure it with Cygwin (my console of choice). It’s not that hard to get Cygwin to open in Console2, but it can be tricky to get it to open in a specific startup directory.

Here are the steps to get Console2 to open to a specific startup directory:

- Launch Console2

- Open settings through Edit > Settings

- Click ‘Tabs’ in the tree on the left

- Click the ‘Add’ button to add a new tab

- Set the title to ‘Cygwin’ (or another appropriate name)

- In the ‘Shell’ field put:

C:\cygwin\bin\bash.exe --login -i -c "cd /cygdrive/c/Users/<username>/<path>/; exec /bin/bash"

- Replace <username> with your login name, and <path> with the path you want to use relative to your home directory. The same command can also be used to start in other paths on the system.

- This launches Cygwin with a command that changes the directory and then launches bash again, so the console window stays open.

- You don’t have to put anything in ‘Startup dir’.

- You should now be set to open Cygwin tabs to a specific directory in Console2.
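If you set up several tabs, the ‘Shell’ value can be generated instead of typed by hand. The following is a minimal sketch, assuming Cygwin is installed in C:\cygwin and uses its default /cygdrive prefix; the function name cygwin_shell_command is made up for illustration, and paths containing spaces are not handled.

```python
def cygwin_shell_command(windows_path, bash_exe=r"C:\cygwin\bin\bash.exe"):
    """Build a Console2 'Shell' value that opens Cygwin bash in windows_path."""
    # Split a path such as C:/Users/me into the drive letter and the rest.
    drive, _, rest = windows_path.partition(":")
    # Assumption: Windows drives are mounted under /cygdrive/<letter>/.
    tail = rest.replace("\\", "/").strip("/")
    cyg_path = "/cygdrive/{0}/{1}".format(drive.lower(), tail)
    # cd first, then exec a fresh bash so the tab stays open.
    return '{0} --login -i -c "cd {1}/; exec /bin/bash"'.format(bash_exe, cyg_path)


print(cygwin_shell_command(r"C:\Users\me\projects"))
```

The printed line can be pasted into the ‘Shell’ field as is.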

Feel free to customize the console further. I like to give each type of console a different color so it’s easy to tell at a glance which one I’m looking at.

In: Configuration · Tagged with: console2, cygwin

Crawling the Web With Lynx

Introduction

There are a few reasons you’d want to use a text-based browser to crawl the web. For example, it makes it easier to do natural language processing on web pages. I was doing this a year or two ago, and at the time I was unable to find a Python library that would remove HTML reliably. Since there was a looming deadline, I only tried BeautifulSoup and NLTK for removing HTML. They eventually crashed on websites after running for a while.

Keep in mind this information is targeted at general crawling. If you’re crawling a specific site or similarly formatted sites, different techniques can be used. You’ll be able to use the formatting to your advantage, so HTML parsers, such as BeautifulSoup, html5lib, or lxml, become more useful.

Lynx

Using Lynx is pretty straightforward. If you type lynx into a command prompt with Lynx installed, it will bring up the browser. There is help to get you started, and you’ll be able to browse the Internet. As you can see, it does a good job of removing the formatting while keeping the text intact. That’s the information we’re after.

Lynx has several command line parameters that we’ll use to do this. To list them, run lynx -help. The most important command line parameter is -dump. It causes Lynx to write the rendered page to standard output rather than showing it in its browser interface, which allows the text to be captured and processed.

Python Code

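    import os
    import signal
    import subprocess
    import threading

    def kill_lynx(pid):
        # Force-kill a process that has run too long, then reap it.
        os.kill(pid, signal.SIGKILL)
        os.waitpid(-1, os.WNOHANG)
        print("lynx killed")

    def get_url(url):
        # Dump the rendered text of the page at url.
        cmd = "lynx -dump -nolist -notitle \"{0}\"".format(url)
        lynx = subprocess.Popen(cmd, shell=True, stdout=subprocess.PIPE)
        # Give up on the page if lynx is still running after 300 seconds.
        t = threading.Timer(300.0, kill_lynx, args=[lynx.pid])
        t.start()
        web_data = lynx.stdout.read()
        t.cancel()
        web_data = web_data.decode("utf-8", 'replace')
        return web_data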

As you can see, in addition to the -dump flag, -nolist and -notitle are used too. The -notitle parameter leaves out the page title: in most cases the title is already included in the text of the website, and most of the time it isn’t a complete sentence. The -nolist parameter removes the list of links from the bottom of the dump. I wanted to parse just the information on the page, and on some pages this greatly decreases the amount of text to process.

One other thing to notice is that lynx is killed after 300 seconds. While crawling, I found that some sites were huge and slow but wouldn’t time out. Killing lynx helped when it was taking too long on one site. An alternate approach would have been to run lynx in several threads, so one slow site wouldn’t block everything. However, killing it worked well enough for my use case.
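For comparison, here is a rough sketch of the alternate approach, not the code I actually ran. It assumes Python 3.5 or newer, where subprocess.run accepts a timeout and kills the child itself, and it uses a small thread pool so one slow site doesn’t hold up the others. The names lynx_args, run_with_timeout, and crawl_many are made up for this example, and lynx is called without a shell, so it has to be on the PATH.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def lynx_args(url):
    """Argument list for the lynx invocation described above, without a shell."""
    return ["lynx", "-dump", "-nolist", "-notitle", url]


def run_with_timeout(args, timeout_seconds=300.0):
    """Run a command and return its decoded stdout, or None if it ran too long."""
    try:
        # subprocess.run kills the child process when the timeout expires.
        done = subprocess.run(args, stdout=subprocess.PIPE, timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        return None
    return done.stdout.decode("utf-8", "replace")


def crawl_many(urls, max_workers=4, timeout_seconds=300.0):
    """Fetch several URLs in parallel; returns a dict of url -> text or None."""
    def fetch(url):
        return run_with_timeout(lynx_args(url), timeout_seconds)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as the input URLs.
        return dict(zip(urls, pool.map(fetch, urls)))
```

A page that times out shows up as None in the result, so it can be retried or skipped later.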

Clean Up

Now that the information has been obtained, it needs to be run through some cleanup to help with the natural language processing of it. Before showing the code, let me just say if the project was ongoing I’d look into better ways of doing it. The best way to describe it is, “It worked for me at the time.”

    import re

    _LINK_BRACKETS = re.compile(r"\[\d+]", re.U)
    _LEFT_BRACKETS = re.compile(r"\[", re.U)
    _RIGHT_BRACKETS = re.compile(r"]", re.U)
    _NEW_LINE = re.compile(r"([^\r\n])\r?\n([^\r\n])", re.U)
    _SPECIAL_CHARS = re.compile(r"\f|\r|\t|_", re.U)
    _WHITE_SPACE = re.compile(r" [ ]+", re.U)

    # Smart quotes and other typographic characters mapped to plain replacements.
    MS_CHARS = {u"\u2018": "'",
                u"\u2019": "'",
                u"\u201c": "\"",
                u"\u201d": "\"",
                u"\u2020": " ",
                u"\u2026": " ",
                u"\u25BC": " ",
                u"\u2665": " "}

    def clean_lynx(input):
        for i in MS_CHARS.keys():
            input = input.replace(i, MS_CHARS[i])

        # Join lines that were wrapped in the middle of a sentence.
        input = _NEW_LINE.sub(r"\g<1> \g<2>", input)
        # Drop numbered link markers, then turn remaining brackets into parentheses.
        input = _LINK_BRACKETS.sub("", input)
        input = _LEFT_BRACKETS.sub("(", input)
        input = _RIGHT_BRACKETS.sub(")", input)
        input = _SPECIAL_CHARS.sub(" ", input)
        # Collapse runs of spaces into a single space.
        input = _WHITE_SPACE.sub(" ", input)

        return input

This cleanup was done for the natural language processing algorithms. Certain algorithms had problems if there were line breaks in the middle of a sentence. Smart quotes, or curved quotes, were also problematic. I remember square brackets (i.e., [ or ]) caused problems with the tagger or sentence tokenizer I was using, so I replaced them with parentheses. There were a few other rules, and you may need more for your projects.

I hope you find this useful. It should get you started with crawling the web. Be prepared to spend time debugging, and it’s a good idea to spot check. The entire time I was working on the project, I was tweaking different parts of it. The web is pretty good at throwing every case at you.
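To make two of those rules concrete, here is a small self-contained sketch that applies only the line-joining and link-marker patterns to a made-up sample string. The helper names join_wrapped_lines and strip_link_markers are just for illustration.

```python
import re

# The same two patterns as in the cleanup code, on their own.
_NEW_LINE = re.compile(r"([^\r\n])\r?\n([^\r\n])")
_LINK_BRACKETS = re.compile(r"\[\d+]")


def join_wrapped_lines(text):
    """Replace a single line break between two characters with a space."""
    return _NEW_LINE.sub(r"\g<1> \g<2>", text)


def strip_link_markers(text):
    """Remove numbered link markers such as [12]."""
    return _LINK_BRACKETS.sub("", text)


sample = "This sentence was\nwrapped by lynx.\n\nSee [3]the docs."
cleaned = strip_link_markers(join_wrapped_lines(sample))
print(repr(cleaned))  # 'This sentence was wrapped by lynx.\n\nSee the docs.'
```

The blank line between paragraphs survives because the pattern needs a non-newline character on both sides of the break. One thing worth knowing: matches can’t overlap, so in a run of one-character lines only every other break gets joined.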

In: Python · Tagged with: nlp, web crawling

Some SQLite 3.7 Benchmarks

Since I wrote the benchmarks for insertions in my last post, SQLite 3.7 has been released. I figured it’d be interesting to see if 3.7 changed the situation at all.

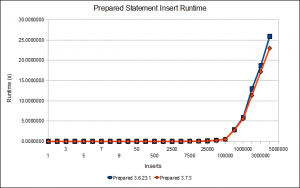

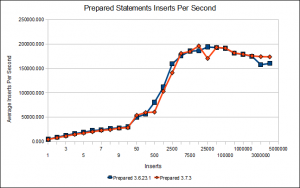

Prepared Statements

The specific versions compared here are 3.6.23.1 and 3.7.3. I ran the prepared statements benchmark as is without changing any source code. Both are using a rollback journal in this case.

As you can see, the new version of SQLite definitely provides better performance. There is a speedup of about 3 seconds.

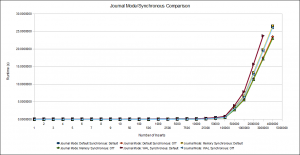

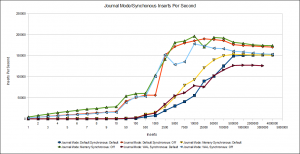

Journal Mode Comparison

One of the main features SQLite 3.7 added was Write Ahead Logging (WAL). The main advantage of write-ahead logging is that it allows more concurrent access to the database than a rollback journal. These benchmarks don’t show the true potential of write-ahead logging: they are single threaded, and they insert a large amount of data in one transaction. It’s listed as a disadvantage that large transactions can be slow with write-ahead logging, even having the potential to return an error. I wanted to evaluate write-ahead logging as a drop-in replacement.

I ran the prepared statements benchmark with the default, memory, and wal settings. I also ran each setting with and without synchronous on. The synchronous setting controls how often SQLite waits for data to be physically written to the hard disk. The default setting is full, which is the safest because it waits for data to be written to the hard disk most frequently. This is compared to synchronous being off, which lets the operating system decide when information should be written to the hard disk. In this case if there is a software crash, it’s more likely the database could become corrupt.
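The benchmark itself is C++, but the six combinations are easy to try from Python’s built-in sqlite3 module. This is only a sketch: it assumes that the “default” journal mode means DELETE, and the helper names open_with_settings and try_all_settings are made up. It applies each combination to a throwaway database file and reads the settings back.

```python
import os
import sqlite3
import tempfile

# Assumption: "default" in the text corresponds to journal_mode=DELETE.
SETTINGS = [(j, s) for j in ("DELETE", "MEMORY", "WAL") for s in ("FULL", "OFF")]


def open_with_settings(path, journal_mode, synchronous):
    """Open a database and apply one journal_mode/synchronous combination."""
    conn = sqlite3.connect(path)
    # PRAGMA journal_mode returns the mode that is actually in effect.
    mode = conn.execute("PRAGMA journal_mode={0}".format(journal_mode)).fetchone()[0]
    conn.execute("PRAGMA synchronous={0}".format(synchronous))
    # Reading the pragma back yields an integer: 0 is OFF, 2 is FULL.
    sync = conn.execute("PRAGMA synchronous").fetchone()[0]
    return conn, mode, sync


def try_all_settings():
    """Return the (journal mode, synchronous level) reported for each combination."""
    results = []
    with tempfile.TemporaryDirectory() as tmp:
        for journal_mode, synchronous in SETTINGS:
            path = os.path.join(tmp, "{0}_{1}.db".format(journal_mode, synchronous))
            conn, mode, sync = open_with_settings(path, journal_mode, synchronous)
            conn.close()
            results.append((mode, sync))
    return results


for mode, sync in try_all_settings():
    print(mode, sync)
```

Checking the value returned by PRAGMA journal_mode is worthwhile, because SQLite reports the mode it actually ended up in rather than the one that was requested.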

Up until about 100,000 insertions all the journal modes and synchronous settings are about even. After 100,000, the insertion benchmarks with synchronous off are faster than their default synchronous counterparts. Journal mode set to memory and synchronous off offered the best performance for this benchmark.

In: C++ · Tagged with: benchmarks, sqlite

Fast Bulk Inserts into SQLite

Background

Sometimes it’s necessary to get information into a database quickly. SQLite is a lightweight database engine that can be easily embedded in applications. This will cover the process of optimizing bulk inserts into an SQLite database. While this article focuses on SQLite, some of the techniques shown here will apply to other databases.

All of the following examples insert data into the same table. It’s a table where an ID is the first element, followed by three FLOAT values and then three INTEGER values. You’ll notice the getDouble() and getInt() functions. They return doubles and ints in a predictable manner. I didn’t use random data because different values could potentially add variability to the benchmarks at the end.

Naive Inserts

This is the most basic way to insert information into SQLite. It simply calls sqlite3_exec for each insert in the database.

    char buffer[300];
    for (unsigned i = 0; i < mVal; i++)
    {
        // Build the complete INSERT statement as text for every row.
        sprintf(buffer, "INSERT INTO example VALUES ('%s', %lf, %lf, %lf, %d, %d, %d)",
                getID().c_str(), getDouble(), getDouble(), getDouble(),
                getInt(), getInt(), getInt());
        // Each call parses, compiles, and runs the statement on its own.
        sqlite3_exec(mDb, buffer, NULL, NULL, NULL);
    }

Inserts within a Transaction

A transaction is a way to group SQL statements together. If an error is encountered, the ON CONFLICT clause can be used to handle it to your liking. Nothing will be written to the SQLite database until either END or COMMIT is encountered to signify that the transaction should be closed and written.

    char* errorMessage;
    // Open one transaction around the whole batch of inserts.
    sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage);

    char buffer[300];
    for (unsigned i = 0; i < mVal; i++)
    {
        sprintf(buffer, "INSERT INTO example VALUES ('%s', %lf, %lf, %lf, %d, %d, %d)",
                getID().c_str(), getDouble(), getDouble(), getDouble(),
                getInt(), getInt(), getInt());
        sqlite3_exec(mDb, buffer, NULL, NULL, NULL);
    }

    // Nothing is written to the database file until the commit.
    sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage);
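The same idea can be sketched with Python’s built-in sqlite3 module, which is handy for quick experiments. This is an illustration rather than a port of the benchmark: the column names, the make_rows helper, and the in-memory database are assumptions, and a ROLLBACK is added so a failed batch leaves nothing behind.

```python
import sqlite3


def make_rows(count):
    """Predictable rows: an ID, three floats, three integers (made-up values)."""
    return [("id{0}".format(i), i * 0.5, i * 1.5, i * 2.5, i, i + 1, i + 2)
            for i in range(count)]


def insert_in_one_transaction(conn, rows):
    """Wrap all inserts in a single explicit transaction."""
    conn.execute("BEGIN TRANSACTION")
    try:
        for row in rows:
            conn.execute("INSERT INTO example VALUES (?, ?, ?, ?, ?, ?, ?)", row)
        conn.execute("COMMIT TRANSACTION")
    except sqlite3.Error:
        # Undo every insert from this batch if any one of them fails.
        conn.execute("ROLLBACK")
        raise


# isolation_level=None puts the connection in autocommit mode, so the
# BEGIN/COMMIT statements above are the only transaction boundaries.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE example "
             "(id TEXT, x FLOAT, y FLOAT, z FLOAT, a INTEGER, b INTEGER, c INTEGER)")
insert_in_one_transaction(conn, make_rows(1000))
print(conn.execute("SELECT COUNT(*) FROM example").fetchone()[0])  # 1000
```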

PRAGMA Statements

PRAGMA statements control the behavior of SQLite as a whole. They can be used to tweak options such as how often the data is flushed to disk or the size of the cache. These are some that are commonly used for performance. The SQLite documentation fully explains what they do and the implications of using them. For example, synchronous off will cause SQLite to not stop and wait for the data to get written to the hard drive. In the event of a crash or power failure, it is more likely the database could be corrupted.

    sqlite3_exec(mDb, "PRAGMA synchronous=OFF", NULL, NULL, &errorMessage);
    sqlite3_exec(mDb, "PRAGMA count_changes=OFF", NULL, NULL, &errorMessage);
    sqlite3_exec(mDb, "PRAGMA journal_mode=MEMORY", NULL, NULL, &errorMessage);
    sqlite3_exec(mDb, "PRAGMA temp_store=MEMORY", NULL, NULL, &errorMessage);

Prepared Statements

Prepared statements are the recommended way of sending queries to SQLite. Rather than parsing the statement over and over again, the parser only needs to be run once on the statement. According to the documentation, sqlite3_exec is a convenience function that calls sqlite3_prepare_v2(), sqlite3_step(), and then sqlite3_finalize(). In my opinion, the documentation should more explicitly say that prepared statements are the preferred query method. sqlite3_exec() should only be used for one time use queries.

    char* errorMessage;
    sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage);

    // Compile the INSERT once; only the bound values change per row.
    char buffer[] = "INSERT INTO example VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7)";
    sqlite3_stmt* stmt;
    sqlite3_prepare_v2(mDb, buffer, strlen(buffer), &stmt, NULL);

    for (unsigned i = 0; i < mVal; i++)
    {
        std::string id = getID();
        sqlite3_bind_text(stmt, 1, id.c_str(), id.size(), SQLITE_STATIC);
        sqlite3_bind_double(stmt, 2, getDouble());
        sqlite3_bind_double(stmt, 3, getDouble());
        sqlite3_bind_double(stmt, 4, getDouble());
        sqlite3_bind_int(stmt, 5, getInt());
        sqlite3_bind_int(stmt, 6, getInt());
        sqlite3_bind_int(stmt, 7, getInt());

        if (sqlite3_step(stmt) != SQLITE_DONE)
        {
            printf("Commit Failed!\n");
        }

        // Reset so the statement can be run again with new bindings.
        sqlite3_reset(stmt);
    }

    sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage);
    sqlite3_finalize(stmt);
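For what it’s worth, Python’s sqlite3 module gives you the same pattern through executemany: the SQL text with ? placeholders is handed over once, and each row only supplies new values to bind. A short sketch, with made-up column names and data:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE example "
             "(id TEXT, x FLOAT, y FLOAT, z FLOAT, a INTEGER, b INTEGER, c INTEGER)")

# A generator is enough; the rows never need to exist as one big list.
rows = (("id{0}".format(i), 0.1 * i, 0.2 * i, 0.3 * i, i, i * 2, i * 3)
        for i in range(1000))

# "with conn" commits on success and rolls back if an exception escapes.
with conn:
    # One statement, many parameter sets: the bind/step/reset loop happens inside.
    conn.executemany("INSERT INTO example VALUES (?, ?, ?, ?, ?, ?, ?)", rows)

inserted = conn.execute("SELECT COUNT(*) FROM example").fetchone()[0]
print(inserted)  # 1000
```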

Storing Data as Binary Blob

Up until now, most of the optimizations have been pretty much the standard advice that you get when looking into bulk insert optimization. If you’re not running queries on some of the data, it’s possible to convert it to binary and store it as a blob. While it’s not advised to just throw everything into a blob and put it in the database, putting data that would be pulled and used together into a binary blob can make sense in some situations.

For example, if you have a point class (x, y, z) with REAL values, it might make sense to store them in a blob rather than three separate fields in a row. That’s only if you don’t need to make queries on the data though. The benefit of this technique increases as more fields are converted into larger blobs.

    char* errorMessage;
    sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage);

    char buffer[] = "INSERT INTO example VALUES (?1, ?2, ?3, ?4, ?5)";
    sqlite3_stmt* stmt;
    sqlite3_prepare_v2(mDb, buffer, strlen(buffer), &stmt, NULL);

    for (unsigned i = 0; i < mVal; i++)
    {
        std::string id = getID();
        sqlite3_bind_text(stmt, 1, id.c_str(), id.size(), SQLITE_STATIC);

        // Copy the three doubles into one 24-byte buffer and bind it as a single blob.
        char dblBuffer[24];
        double d[] = {getDouble(), getDouble(), getDouble()};
        memcpy(dblBuffer, (char*)&d, sizeof(d));
        sqlite3_bind_blob(stmt, 2, dblBuffer, 24, SQLITE_STATIC);

        sqlite3_bind_int(stmt, 3, getInt());
        sqlite3_bind_int(stmt, 4, getInt());
        sqlite3_bind_int(stmt, 5, getInt());

        int retVal = sqlite3_step(stmt);
        if (retVal != SQLITE_DONE)
        {
            printf("Commit Failed! %d\n", retVal);
        }

        sqlite3_reset(stmt);
    }

    sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage);
    sqlite3_finalize(stmt);

Note: I just used memcpy here, but this would cause problems when moving data between big-endian and little-endian systems. If that matters, it would be a good idea to serialize the data using a serialization library (e.g., Protocol Buffers or MessagePack).
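If a full serialization library feels like overkill, pinning the byte order yourself also works. As a sketch in Python: the struct module’s "<" prefix forces little-endian layout regardless of the machine, so three doubles always become the same 24 bytes. The points table and the helper names here are made up for the example.

```python
import sqlite3
import struct

# "<" forces little-endian byte order; "3d" is three 8-byte doubles.
POINT_FORMAT = "<3d"


def pack_point(x, y, z):
    """Serialize a point into a fixed 24-byte little-endian blob."""
    return struct.pack(POINT_FORMAT, x, y, z)


def unpack_point(blob):
    """Turn a 24-byte blob back into an (x, y, z) tuple."""
    return struct.unpack(POINT_FORMAT, blob)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE points (id TEXT, xyz BLOB)")
# A bytes value is bound as a BLOB.
conn.execute("INSERT INTO points VALUES (?, ?)", ("p1", pack_point(1.5, -2.25, 3.0)))

stored = conn.execute("SELECT xyz FROM points WHERE id = 'p1'").fetchone()[0]
print(len(stored))           # 24
print(unpack_point(stored))  # (1.5, -2.25, 3.0)
```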

Performance

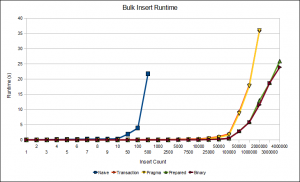

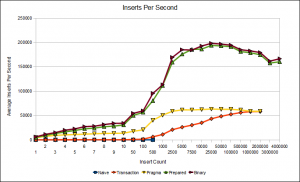

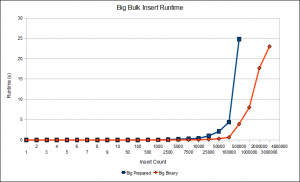

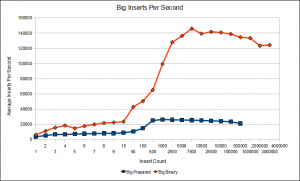

I ran benchmarks to test the performance of each method of inserting data. Note that the x axis does not scale linearly; it most closely matches a logarithmic scale. The inserts-per-second graph was obtained by dividing the number of inserts by the total runtime.
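The inserts-per-second calculation is simple enough to sketch. This is not the benchmark harness, just an illustration of the formula in Python, using an in-memory database and a made-up two-column table, so the numbers it prints are not comparable to the graphs.

```python
import sqlite3
import time


def inserts_per_second(insert_count):
    """Time one batch of inserts and return inserts divided by total runtime."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE example (id TEXT, value FLOAT)")
    rows = [("id{0}".format(i), float(i)) for i in range(insert_count)]

    start = time.perf_counter()
    with conn:
        conn.executemany("INSERT INTO example VALUES (?, ?)", rows)
    # Guard against a zero reading on very coarse clocks.
    elapsed = max(time.perf_counter() - start, 1e-9)
    conn.close()

    return insert_count / elapsed


# Sizes grow by a factor of ten, similar in spirit to a logarithmic x axis.
for count in (100, 1000, 10000):
    print(count, round(inserts_per_second(count)))
```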

After running the first benchmark, I wanted to show how storing data in binary can make a difference. I ran it again, but instead of storing only three doubles, I stored 24 doubles. I assumed order mattered, so for the benchmark that is not stored in a binary blob, I made a separate table with ID and order columns. This way both versions captured the same information.

Good luck with your database inserts.

In: C++ · Tagged with: benchmarks, optimization, sqlite

Nightly Benchmarks: Tracking Results with Codespeed

Background

Codespeed is a project for tracking performance. I discovered it when the PyPy project started using Codespeed to track performance. Since then development has been done to make its setup easier and provide more display options.

Two posts ago I talked about running nightly benchmarks with Hudson. Then in the previous post I discussed passing parameters between builds in Hudson. Both of these posts are worth reading before trying to set up Hudson with Codespeed.

Codespeed Installation/Configuration

Django Quickstart

Codespeed is built on Python and Django. Some basic knowledge of Django is needed in order to get everything up and running. Don’t worry, it’s not that hard to learn the bit that is needed. manage.py is all you need to know about to set up and view Codespeed. There is information about deploying Django to a real web server, but I won’t be covering that here.

Here are the commands to get Django running:

syncdb is used to initialize the database with the necessary tables. It will also set up an admin account. With the sqlite3 database selected, it will create the database file when this command is run.

The command is:

python manage.py syncdb

The next command is the runserver command. This runs the built-in Django server. The documentation states that you’re not supposed to use it in a production environment, so make sure to deploy to a production environment if you plan to host it on the Internet or a high traffic network.

The command is:

python manage.py runserver 0.0.0.0:9000

By default the server will run on 127.0.0.1:8000. Setting the IP to 0.0.0.0 allows connections from any computer. This works well if you’re on a local area network and want to set it up on a VM over SSH, but still be able to access the web interface from your computer. The number after the colon is the port the server runs on. To view Codespeed, point your browser at 127.0.0.1:9000, or at the IP of the machine it’s on followed by :9000.

Django has many settings that may or may not need to be tweaked for your environment. They can be set through the speedcenter/settings.py file.

Codespeed Setup/Settings

Now for setting up the actual Codespeed server. First check it out using git. The clone command is:

git clone http://github.com/tobami/codespeed.git

The settings file is speedcenter/codespeed/settings.py.

Most of the default values will work fine. They’re mostly for setting default values for various things in the interface.

One thing that does need to be configured is the environment. Start by running the syncdb command and then run the server using runserver. Now that the server is running, browse to the admin interface. If you ran the server on port 9000, point your browser at http://127.0.0.1:9000/admin. Log in using the username and password you created during the syncdb call. A Codespeed environment must be created manually. The environment is the machine you’re running the benchmarks on. After logging in, click Add next to the Environment label. Fill in the various fields and remember the name you give it. Save it when you’re done. The name will be used later when submitting benchmark data to Codespeed.

Submitting Benchmarks

This will pick up where my last tutorial left off. The benchmarks were running as a nightly job in Hudson. Sending benchmark data to Codespeed will take a bit of programming. I’m going to continue the example with JRuby, so the benchmarks and submission process are written in Ruby.

In order to submit benchmarks, information must be transferred from the JRuby build job to the Ruby Benchmarks job. My last post discussed how to transfer parameters between jobs. Using the Parameterized Trigger Plugin and passing extra parameters using a properties file will allow you to get all the necessary parameters to the benchmarks job.

The required information for submitting a benchmark result to Codespeed includes:

- commitid – The id of the commit, which could either be a git/mercurial hashcode or an svn revision number.

- project – The name of the project to save.

- executable – The name of the executable.

- benchmark – The name of the benchmark.

- environment – This is the name of the environment you created earlier. It must be the name of an existing environment.

- result_value – The runtime of the benchmark. You can configure what units a benchmark has through the admin interface. Default is seconds.

This information can be included but is optional:

- std_dev – The standard deviation of the results of the benchmarks.

- min

- max

- branch – The branch corresponding to this benchmark in the SCM repository.

- result_date – The timestamp of the commit in the form “%Y-%m-%d %H:%M”

The above information is sent to Codespeed as URL-encoded form parameters in an HTTP POST. Point the request at http://127.0.0.1:9000/result/add/ and encode the parameters for sending. For the JRuby benchmarks, the following parameters are sent from the JRuby job to the Ruby benchmarks job.

    COMMIT_ID=$(git rev-parse HEAD)
    COMMIT_TIME=$(git log -1 --pretty=\"format:%ad\")
    RUBY_PATH=$WORKSPACE/bin/jruby
    REPO_URL=git://github.com/jruby/jruby.git

The other fields are derived from the benchmarks job itself.

Here is the source code for submission through Ruby:

    require 'net/http'
    require 'uri'

    output = {}
    canonical_name = doc["name"].gsub '//', '/'
    output['commitid'] = commitid
    output['project'] = BASE_VM
    output['branch'] = branch
    output['executable'] = BASE_VM
    output['benchmark'] = File.basename(canonical_name)
    output['environment'] = environment
    output['result_value'] = doc["mean"]
    output['std_dev'] = doc["standard_deviation"]
    output['result_date'] = commit_time

    # POST the fields as a URL-encoded form and show Codespeed's reply.
    res = Net::HTTP.post_form(URI.parse("#{server}/result/add/"), output)
    puts res.body

It’s a good idea to always print out the response as it will contain debug information. There is an example of how to submit benchmarks to Codespeed using Python in the Codespeed repository in the tools directory.
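If you’d rather not dig through the repository, here is my own rough Python sketch of the same submission, using only the standard library. It is not the script from the tools directory. The function names build_result and submit_result are made up, and the field names are the ones listed above.

```python
from datetime import datetime
from urllib.parse import urlencode
from urllib.request import urlopen


def build_result(commitid, project, executable, benchmark, environment,
                 result_value, commit_time=None, **optional):
    """Assemble the form fields for one benchmark result."""
    data = {
        "commitid": commitid,
        "project": project,
        "executable": executable,
        "benchmark": benchmark,
        "environment": environment,
        "result_value": result_value,
    }
    if commit_time is not None:
        # Codespeed expects the timestamp as "%Y-%m-%d %H:%M".
        data["result_date"] = commit_time.strftime("%Y-%m-%d %H:%M")
    # Optional fields such as std_dev, min, max, or branch.
    data.update(optional)
    return data


def submit_result(server, data):
    """POST the URL-encoded fields to Codespeed and return the response body."""
    body = urlencode(data).encode("ascii")
    # Passing a data argument makes urlopen send a POST request.
    with urlopen(server + "/result/add/", body) as response:
        return response.read().decode("utf-8", "replace")
```

With the server from earlier running, a call would look like print(submit_result("http://127.0.0.1:9000", build_result("abc123", "jruby", "jruby", "bm_fib", "my-machine", 1.25))), where every value is a placeholder. Keep in mind that urlopen raises an exception on an HTTP error status, so wrap the call if you want the job to keep going.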

Viewing Results

After results are in the Codespeed database, you can view the data through the web interface. Direct a browser at http://127.0.0.1:9000. The changes view shows the trend over the last revisions. The timeline view allows you to see a graph of recent revisions, and the newly added comparison view will compare different executables running the same benchmark.

In: Uncategorized · Tagged with: benchmarks, continuous integration, jruby