Fast Bulk Inserts into SQLite

Background

Sometimes it’s necessary to get information into a database quickly. SQLite is a light weight database engine that can be easily embedded in applications. This will cover the process of optimizing bulk inserts into an SQLite database. While this article focuses on SQLite some of the techniques shown here will apply to other databases.

All of the following examples insert data into the same table. It’s a table where an ID is the first element followed by three FLOAT values, and then follow by three INTEGER values. You’ll notice the getDouble() and getInt() functions. They return doubles and ints in a predictable manner. I didn’t use random data because different values could potentially add variability to the benchmarks at the end.

Naive Inserts

This is the most basic way to insert information into SQLite. It simply calls sqlite3_exec for each insert in the database.

char buffer[300]; for (unsigned i = 0; i < mVal; i++) { sprintf(buffer, "INSERT INTO example VALUES ('%s', %lf, %lf, %lf, %d, %d, %d)", getID().c_str(), getDouble(), getDouble(), getDouble(), getInt(), getInt(), getInt()); sqlite3_exec(mDb, buffer, NULL, NULL, NULL); } |

Inserts within a Transaction

A transaction is a way to group SQL statements together. If an error is encountered the ON CONFLICT statement can be used to handle that to your liking. Nothing will be written to the SQLite database until either END or COMMIT is encountered to signify the transaction should be closed and written.

char* errorMessage; sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage); char buffer[300]; for (unsigned i = 0; i < mVal; i++) { sprintf(buffer, "INSERT INTO example VALUES ('%s', %lf, %lf, %lf, %d, %d, %d)", getID().c_str(), getDouble(), getDouble(), getDouble(), getInt(), getInt(), getInt()); sqlite3_exec(mDb, buffer, NULL, NULL, NULL); } sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage); |

PRAGMA Statements

PRAGMA statements control the behavior of SQLite as a whole. They can be used to tweak options such as how often the data is flushed to disk of the size of the cache. These are some that are commonly used for performance. The SQLite documentation fully explains what they do and the implications of using them. For example, synchronous off will cause SQLite to not stop and wait for the data to get written to the hard drive. In the event of a crash or power failure, it is more likely the database could be corrupted.

sqlite3_exec(mDb, "PRAGMA synchronous=OFF", NULL, NULL, &errorMessage); sqlite3_exec(mDb, "PRAGMA count_changes=OFF", NULL, NULL, &errorMessage); sqlite3_exec(mDb, "PRAGMA journal_mode=MEMORY", NULL, NULL, &errorMessage); sqlite3_exec(mDb, "PRAGMA temp_store=MEMORY", NULL, NULL, &errorMessage); |

Prepared Statements

Prepared statements are the recommended way of sending queries to SQLite. Rather than parsing the statement over and over again, the parser only needs to be run once on the statement. According to the documentation, sqlite3_exec is a convenience function that calls sqlite3_prepare_v2(), sqlite3_step(), and then sqlite3_finalize(). In my opinion, the documentation should more explicitly say that prepared statements are the preferred query method. sqlite3_exec() should only be used for one time use queries.

char* errorMessage; sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage); char buffer[] = "INSERT INTO example VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7)"; sqlite3_stmt* stmt; sqlite3_prepare_v2(mDb, buffer, strlen(buffer), &stmt, NULL); for (unsigned i = 0; i < mVal; i++) { std::string id = getID(); sqlite3_bind_text(stmt, 1, id.c_str(), id.size(), SQLITE_STATIC); sqlite3_bind_double(stmt, 2, getDouble()); sqlite3_bind_double(stmt, 3, getDouble()); sqlite3_bind_double(stmt, 4, getDouble()); sqlite3_bind_int(stmt, 5, getInt()); sqlite3_bind_int(stmt, 6, getInt()); sqlite3_bind_int(stmt, 7, getInt()); if (sqlite3_step(stmt) != SQLITE_DONE) { printf("Commit Failed!\n"); } sqlite3_reset(stmt); } sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage); sqlite3_finalize(stmt); |

Storing Data as Binary Blob

Up until now, most of the optimizations have been pretty much the standard advice that you get when looking into bulk insert optimization. If you’re not running queries on some of the data, it’s possible to convert it to binary and store it as a blob. While it’s not advised to just throw everything into a blob and put it in the database, putting data that would be pulled and used together into a binary blob can make sense in some situations.

For example, if you have a point class (x, y, z) with REAL values, it might make sense to store them in a blob rather than three separate fields in row. That’s only if you don’t need to make queries on the data though. The benefit of this technique increases as more fields are converted into larger blobs.

char* errorMessage; sqlite3_exec(mDb, "BEGIN TRANSACTION", NULL, NULL, &errorMessage); char buffer[] = "INSERT INTO example VALUES (?1, ?2, ?3, ?4, ?5)"; sqlite3_stmt* stmt; sqlite3_prepare_v2(mDb, buffer, strlen(buffer), &stmt, NULL); for (unsigned i = 0; i < mVal; i++) { std::string id = getID(); sqlite3_bind_text(stmt, 1, id.c_str(), id.size(), SQLITE_STATIC); char dblBuffer[24]; double d[] = {getDouble(), getDouble(), getDouble()}; memcpy(dblBuffer, (char*)&d, sizeof(d)); sqlite3_bind_blob(stmt, 2, dblBuffer, 24, SQLITE_STATIC); sqlite3_bind_int(stmt, 3, getInt()); sqlite3_bind_int(stmt, 4, getInt()); sqlite3_bind_int(stmt, 5, getInt()); int retVal = sqlite3_step(stmt); if (retVal != SQLITE_DONE) { printf("Commit Failed! %d\n", retVal); } sqlite3_reset(stmt); } sqlite3_exec(mDb, "COMMIT TRANSACTION", NULL, NULL, &errorMessage); sqlite3_finalize(stmt); |

Note: I just used memcpy here, but this would have issues going between big and little endian systems. If that’s necessary, it would be a good idea to serialize the data using a serialization library (ie – protocol buffers or msgpack).

Performance

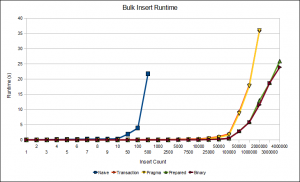

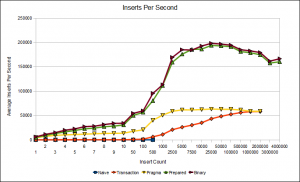

I ran benchmarks to test the performance of each method of inserting data. Take note that the x axis does not scale linearly, it most closely matches a logarithmic scale. The inserts per second graph was obtained by taking the number of inserts and dividing it by the total runtime.

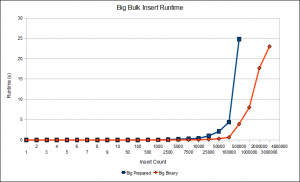

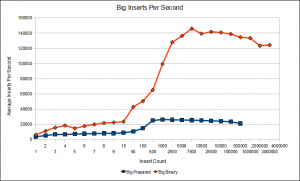

After running the first benchmark, I wanted to show how storing data in binary can make a difference. I ran it again, but instead of storing only three doubles, I stored 24 doubles. I assumed order mattered, so for the benchmark that is not stored in a binary blob, I made a separate table with ID and order columns. This way both versions captured the same information.

Good luck with your database inserts.

Fast Bulk Inserts into SQLite

Background

Sometimes it’s necessary to get information into a database quickly. SQLite[http://sqlite.org/] is a light weight database engine that can be easily embedded in applications. This will cover the process of optimizing bulk inserts into an SQLite database. While this article focuses on SQLite some of the techniques shown here will apply to other databases.

Naive Inserts

This is the most basic way to insert information into SQLite. It simply calls sqlite3_exec[http://www.sqlite.org/c3ref/exec.html] for each insert in the database.

[insert code here]

Inserts within a Transaction

A transaction is a way to group SQL statements together. If an error is encountered the ON CONFLICT statement can be used to handle that to your liking. Nothing will be written to the SQLite database until either END or COMMIT is encountered to signify the transaction should be written and closed.

[insert code here]

PRAGMA Statements

PRAGMA statements[http://sqlite.org/pragma.html] control the behavior of SQLite as a whole. They can be used to tweak options such as how often the data is flushed to disk of the size of the cache.

[insert code here]

Prepared Statements

Prepared statements[http://sqlite.org/c3ref/prepare.html] are the recommended way of sending queries to SQLite. Rather than parsing the statement over and over again, the parser only needs to be run once on the statement. In all honesty, the documentation for sqlite3_exec should say not to use it at all in favor of prepared statements. They are not only faster on inserts, but across the board for all SQL statements.

[insert cod here]

Storing Data as Binary Blob

Up until now, most of the optimizations have been pretty much the standard advice that you get when looking into bulk insert optimization. If you’re not running queries on some of the data, it’s possible to convert it to binary and store it as a blob. While it’s not advised to just throw everything into a blob and put it in the database, putting data that would be pulled and used together into a binary blob can make sense in some situations.

For example, if you have a point class (x, y, z) with REAL values, it might make sense to store them in a blob rather than three separate fields in row. That’s only if you don’t need to make queries on the data though. The benefits of this technique increase as more fields are converted into larger blobs.

[insert code here]

Note: I just do a memcpy here, but this would have issues going between big and little endian systems. If that’s necessary, it would be a good idea to serialize the data using a serialization library (ie – protocol buffers[http://code.google.com/apis/protocolbuffers/docs/overview.html], msgpack[http://msgpack.org/], thrift[http://incubator.apache.org/thrift/]).

Performance

Fast Bulk Inserts into SQLite

Background

Sometimes it’s necessary to get information into a database quickly. SQLite[http://sqlite.org/] is a light weight database engine that can be easily embedded in applications. This will cover the process of optimizing bulk inserts into an SQLite database. While this article focuses on SQLite some of the techniques shown here will apply to other databases.

Naive Inserts

This is the most basic way to insert information into SQLite. It simply calls sqlite3_exec[http://www.sqlite.org/c3ref/exec.html] for each insert in the database.

[insert code here]

Inserts within a Transaction

A transaction is a way to group SQL statements together. If an error is encountered the ON CONFLICT statement can be used to handle that to your liking. Nothing will be written to the SQLite database until either END or COMMIT is encountered to signify the transaction should be written and closed.

[insert code here]

PRAGMA Statements

PRAGMA statements[http://sqlite.org/pragma.html] control the behavior of SQLite as a whole. They can be used to tweak options such as how often the data is flushed to disk of the size of the cache.

[insert code here]

Prepared Statements

Prepared statements[http://sqlite.org/c3ref/prepare.html] are the recommended way of sending queries to SQLite. Rather than parsing the statement over and over again, the parser only needs to be run once on the statement. In all honesty, the documentation for sqlite3_exec should say not to use it at all in favor of prepared statements. They are not only faster on inserts, but across the board for all SQL statements.

[insert cod here]

Storing Data as Binary Blob

Up until now, most of the optimizations have been pretty much the standard advice that you get when looking into bulk insert optimization. If you’re not running queries on some of the data, it’s possible to convert it to binary and store it as a blob. While it’s not advised to just throw everything into a blob and put it in the database, putting data that would be pulled and used together into a binary blob can make sense in some situations.

For example, if you have a point class (x, y, z) with REAL values, it might make sense to store them in a blob rather than three separate fields in row. That’s only if you don’t need to make queries on the data though. The benefits of this technique increase as more fields are converted into larger blobs.

[insert code here]

Note: I just do a memcpy here, but this would have issues going between big and little endian systems. If that’s necessary, it would be a good idea to serialize the data using a serialization library (ie – protocol buffers[http://code.google.com/apis/protocolbuffers/docs/overview.html], msgpack[http://msgpack.org/], thrift[http://incubator.apache.org/thrift/]).

Performance

In: C++ · Tagged with: benchmarks, optimization, sqlite

on August 28, 2011 at 11:28 am